This is akin to Warren Buffett’s (correct) contention that the most important task is to survive in the market, not necessarily to make the most profit possible.

That’s how I’ve always done it.

Indeed. It’s quite the mystery.

I read Moby-Dick in third grade. Yes, I understood it all just fine, and I loved it. The Hobbit, if we were any kind of real civilization anymore, is more appropriate for normie 7- to 8-year-olds.

The Iran War Is Jeopardizing the Entire Global Economy.

America’s cost-of-living crisis is entering its most brutal phase.

The Disappearing American Mortgage.

Why MAGA suddenly loves solar power.

Morgan Stanley lays off 2,500 employees across divisions.

TerraPower gets OK to start construction of its first nuclear plant.

LexisNexis confirms data breach as hackers leak stolen files.

“Cyborg” Tissue Could Help Fast-Track Cures for Type 1 Diabetes.

U.S. Gasoline Demand Fell Further amid Long-Term Structural Shift: Plunging Per-Capita Consumption.

The future of many Western European nations (the UK, France, Spain, Germany) probably resembles Lebanon, or worse. Unless Muslims are evicted soon, it’s inevitable. It’s what Islam does as infiltration and takeover is what it is designed to do.

That has been inevitable for a while. It’ll also be accompanied by a ban on non-corporate VPNs.

The UK also has nukes, and will also likely be Islamicized. Yet another reason Poland needs nukes posthaste.

Aw, shit, I’ve read all of those, and one of them (Beowulf) twice, in two different translations.

Guess I am a far-right extremist now!

That is extremely plausible. The time to do this would be when the US is distracted by Iran. I’d also expect China to attempt moves on Taiwan around the same time as Russia attempts to take the Baltic states.

It’ll be 2-3x that at least. That’s way off.

It’s more than that. They valorize the criminal and insane, while deploring the competent and valuable. Most had more empathy and kind words for Decarlos Brown than they did for Iryna Zarutska. And for that they should never receive forgiveness.

Only expatriation flights to Somalia.

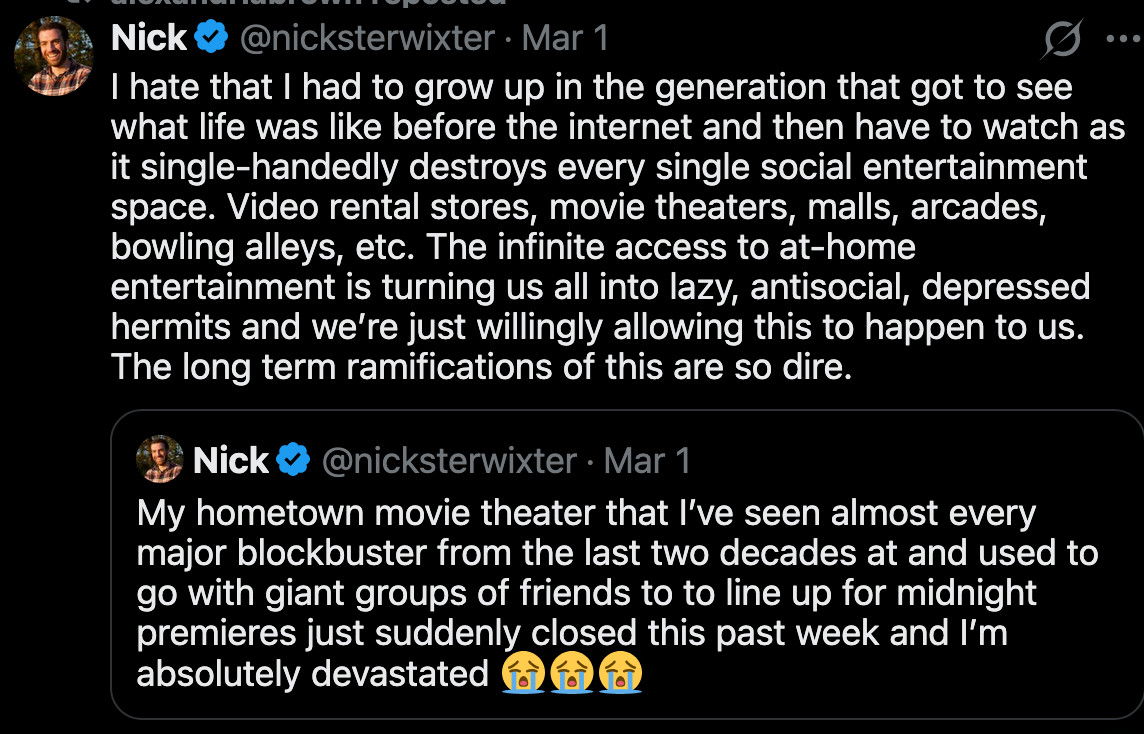

This is a terrible trend. Even my antisocial ass loved going to the arcade on the rare occasions I got to as a kid. That we’ve just collectively decided to sit at home and scroll on our phones is so, so evil.

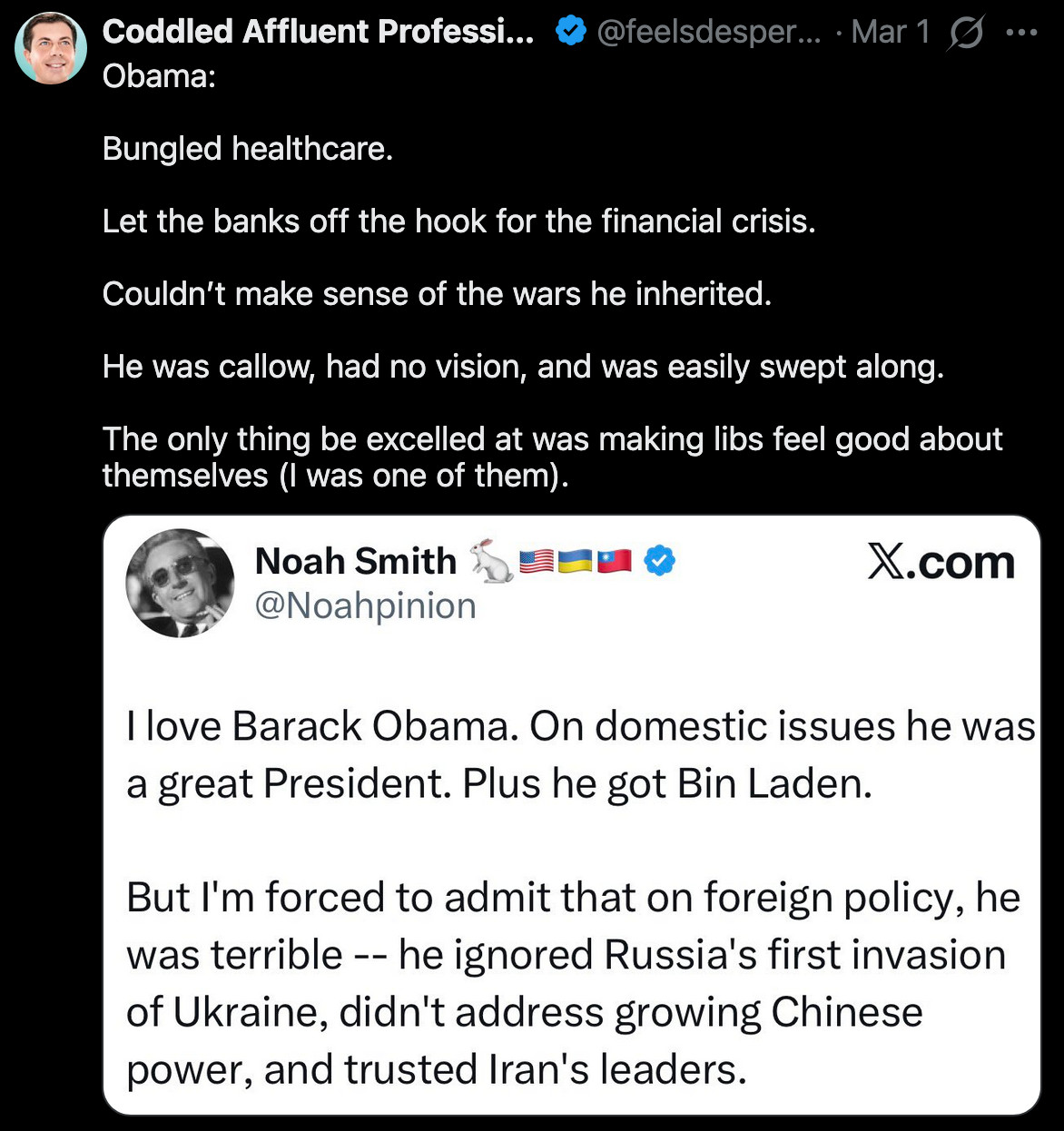

Obama was a terrible president who screwed up everything he touched, except for making a boatload of money right after he left office. That, he excelled at, because it was his real goal anyway.

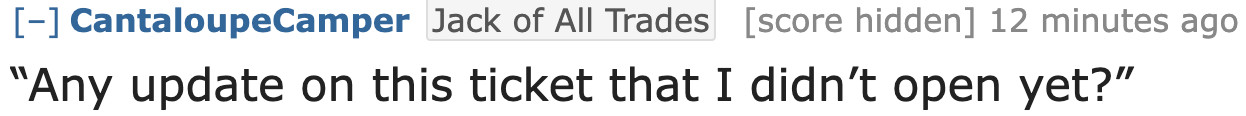

Describe working in IT to normies.

That’s perfect. I’ve had this happen numerous times in my career:

User/Manager: When will [major project] be completed?

Me: This is the first I’ve heard of this project. When was it scoped and what is the goal of the project? Who is running it?

User/Manager: Oh, I thought you knew. [Major Project] is due in a week. Your team should get rolling to meet the deadline!

Me: Looks like about six months of work even if we bust ass. Not gonna happen.

User/Manager: Why is IT always so useless?!?!?!

Not a good thing.